The SmartData@PoliTO center focuses on Big Data technologies and Data Science approaches.

Scope and goals – The world is producing more data than ever, and we now have the power to collect, store, process, and model this enormous amount of data. This is changing the way problems are approached: phenomenological models are increasingly being complemented by data-driven approaches. Big data and data science are interdisciplinary fields that address the extraction of knowledge and value from data.

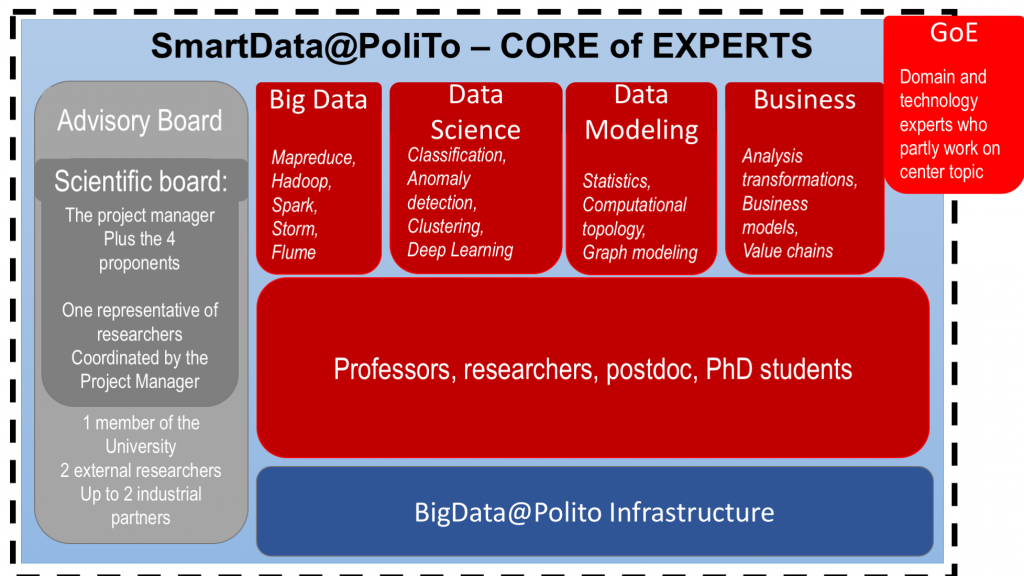

SmartData — Machine learning, data mining, statistics, signal processing, and graph modeling are among the fundamentals of big data and data science. Only when they are well matched can data scientists and domain experts make data “smart”. SmartData@PoliTO combines these fundamentals by coupling data, applications, and the specialized knowledge of technical and business domains. The core of SmartData@PoliTO is made up of scholars specialized in algorithms, computing architectures, applications, and management.

Multidisciplinary and open – The center is formed by experts with multidisciplinary competences. Twenty-nine professors from seven areas are involved in the center. About half of them form the Core of Experts. The other half form the Group of Experts, acting as links with companies, other centers, and labs to set up structured collaborations. Multidisciplinary by nature, the center is open to further collaborations. This multidisciplinary and transversal approach makes SmartData@PoliTO radically different from other initiatives in Italian universities, which retain the “vertical” shape imposed by departmental structures.

Covered topics — The center focuses on interdisciplinary competences ranging from fundamental problems of data science to applications in different domains. Topics covered by the laboratory lie in the fields of big data collection, storage, and processing, data science and data mining in general, and business models and practices applied to big data. They can be coarsely grouped into three areas:

- Algorithms and methodologies for data analysis, with a focus on big data processing, data mining, deep learning, and supervised and unsupervised machine learning, such as rule mining, classification and prediction, anomaly detection, and clustering.

- Methodologies for data modeling based on statistics, computational topology, and geometry, with applications to data analysis, and the study of hybrid systems that merge big data with simulation approaches.

- Study of the transformations in industrial structures, value chains, business models, and managerial practices driven by the greater availability of big data.

Applications — The center has identified the following application areas:

- Predictive maintenance: Historical data make it possible to define models that detect failures in advance and to implement appropriate strategies that reduce maintenance operations. Big data plays a key role, since system complexity makes phenomenological models almost impossible to use.

- Internet & Cybersecurity: The Internet is the biggest source of big data and a constant source of cyberthreats, with the IoT scaling the challenge to enormous sizes. Network management and security involve the study of traffic, with anomaly detection algorithms applied to detect cybersecurity threats and to design countermeasures.

- Mobility analysis: The heterogeneity of user habits, tastes, and social interactions makes understanding customers’ needs a challenge. Analyzing and modeling data coming from different platforms is key to providing new offerings, improving customer satisfaction, and improving system processes.